Hi, I’m Azmat Hussain


Generative AI Specialist

ML Engineer

BI Developer

Technical PM

As an AI and Robotics engineer with strong hands-on experience in Edge AI, IoT, computer vision, and embedded systems, I specialize in developing and deploying intelligent real-time solutions. I have led R&D teams in building robotics platforms, UWB-based tracking systems, autonomous agricultural robots, and AI-driven analytics tools, working with TensorFlow, PyTorch, ROS, Jetson devices, and integrated sensor networks. My work blends software–hardware expertise, model optimization, and practical deployment, supported by successful project delivery and published research. I am now seeking opportunities where I can apply my technical depth and innovation-focused mindset to build advanced AI, robotics, and automation solutions.

What I Do

AI Engineer

As an AI Engineer, I design and build intelligent systems that solve real business problems. I collect and clean data, choose and train suitable models (from classic ML to deep learning), evaluate them with clear metrics, and turn prototypes into usable tools or APIs. I explain results in plain terms, protect data privacy, test thoroughly, and work with teams to integrate models into products so clients get reliable, useful solutions.

Generative AI Engineer

As a Generative AI Engineer, I create and fine-tune models that generate text, images, or audio to meet client needs. I prepare training and prompt datasets, craft effective prompts, and tune models for quality and cost. I add safety filters and guardrails, deliver demos, and integrate generation into apps via APIs. I help clients automate content, produce creative assets, summarize information, and rapidly prototype new product ideas.

Agentic AI Engineer

As an Agentic AI Engineer, I build autonomous agents that plan, act, and adapt to complete multi-step tasks on behalf of users. I design the agent’s decision loops, connect tools and APIs, set safety rules and human-in-the-loop checkpoints, and test agents in safe sandboxes. I monitor behavior, log actions, and refine strategies so agents are reliable and aligned. I help clients automate complex workflows, research tasks, and orchestrate multi-service processes safely.

My Portfolio

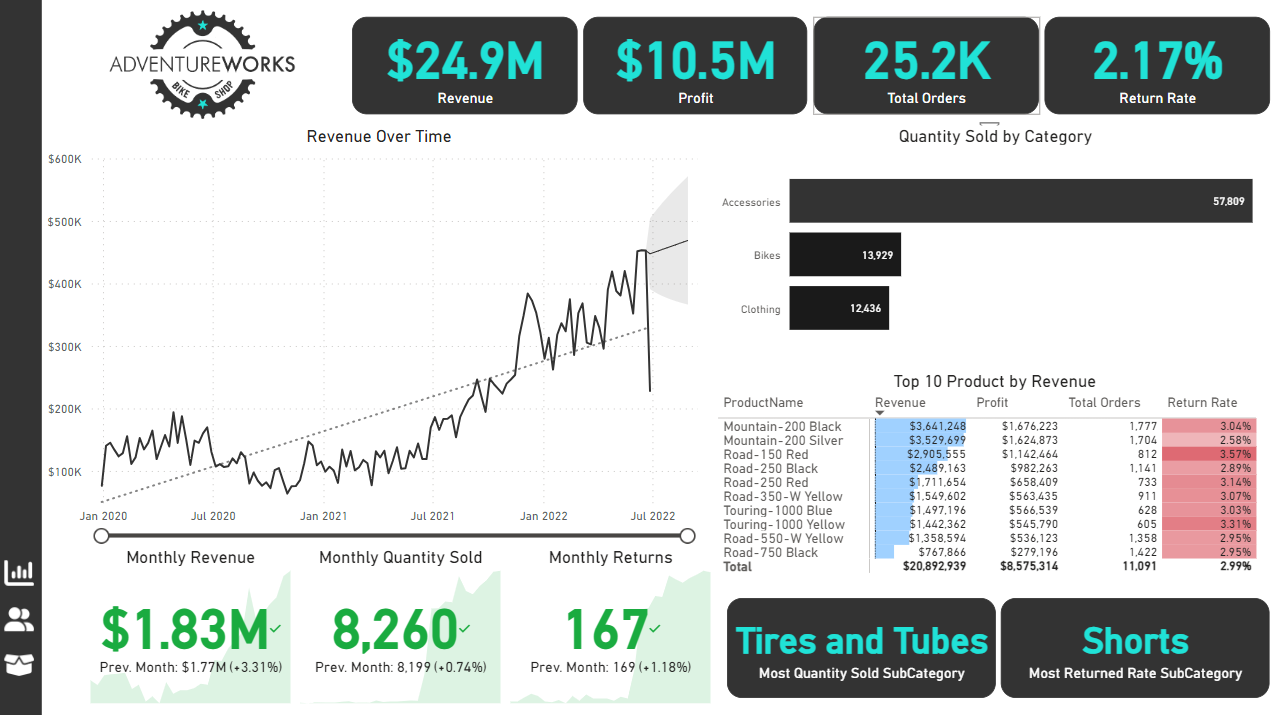

The Situation:

Adventure Works is a fictional global manufacturing company that produces cycling equipment and accessories, with operations across three continents (North America, Europe, and Oceania). Our goal is to transform its raw data into meaningful insights and recommendations for management. More specifically, we need to:

- Track KPIs (sales, revenue, profit, returns)

- Compare regional performance

- Analyse product-level trends

- Identify high-value customers

The Data:

We were given a collection of raw data in eight CSV tables containing information on transactions, returns, products, customers, and sales territories, covering the years 2020 to 2022.

The Task:

Using only Microsoft Power BI, we were tasked to:

- Connect and transform/shape the data in Power BI’s back-end using Power Query

- Build a relational data model, linking the 8 fact and dimension tables

- Create calculated columns and measures with DAX

- Design a multi-page interactive dashboard to visualize the data in Power BI’s front-end

The Process:

1. Connecting and Shaping the Data

Firstly, we imported the data into the Power Query Editor to clean and transform it. This involved:

Removing Duplicates: Duplicate entries were removed from the dataset to ensure accurate analysis.

Handling Null or Missing Values: In some columns, missing values were replaced with defaults or averages; rows with null values in key columns were filtered out.

Data Type Conversion: Columns were converted to appropriate data types to ensure consistency. Dates were converted to Date type, numerical columns to Decimal or Whole Numbers, and text columns to Text.

Column Splitting and Merging: Several columns were split to separate concatenated information, or merged to create a unified name (such as Customer Full Name).

Standardising Date Formats: All date columns were formatted consistently, which is essential for accurate time-series analysis in Power BI.

Removing Unnecessary Columns: Irrelevant columns were removed to streamline the dataset, keeping the analysis focused on relevant information while reducing memory usage and improving performance.

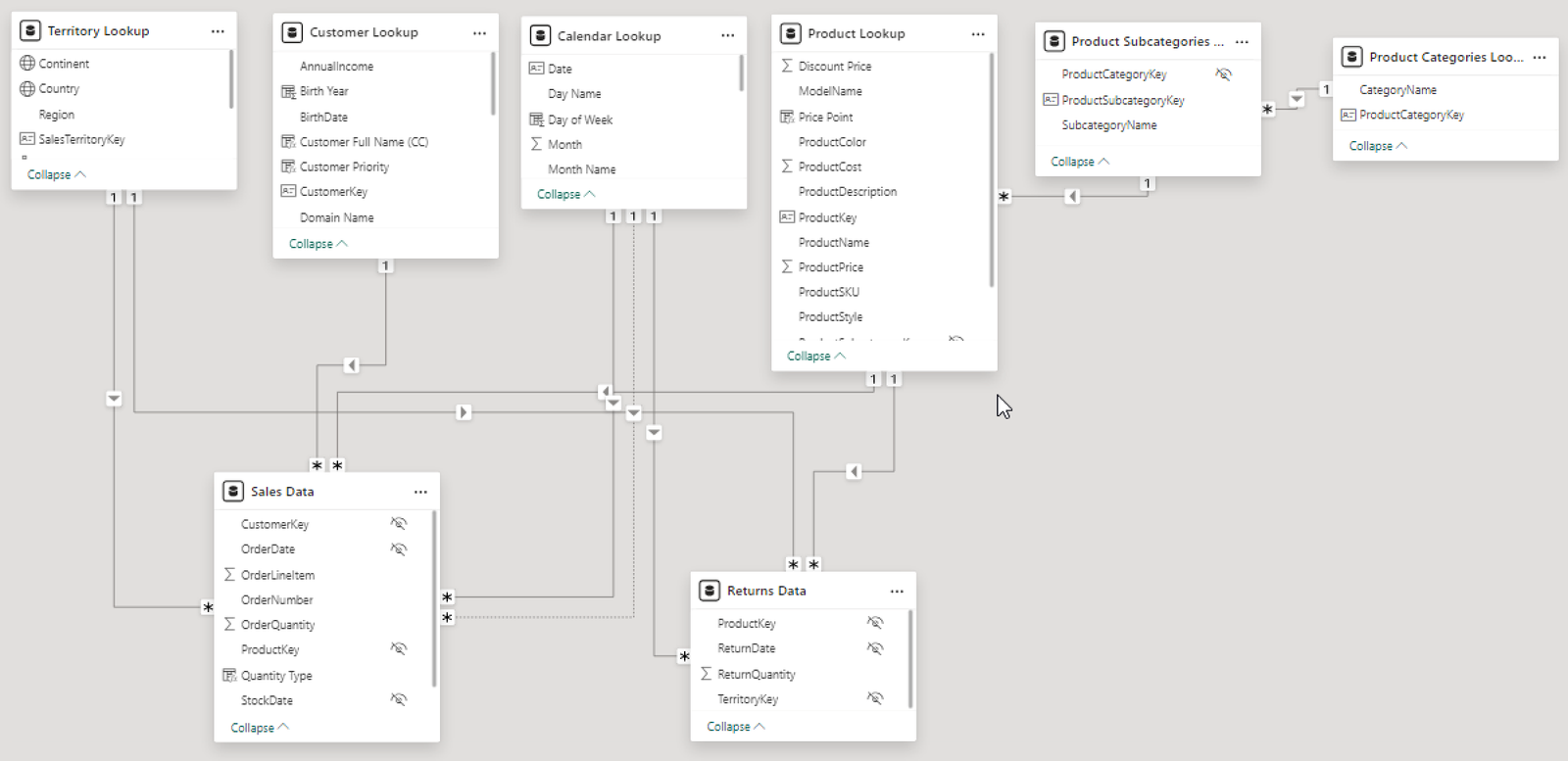
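The cleaning steps above were carried out in Power Query. Purely as an illustration of the same logic (not the project's actual implementation), the sketch below applies those steps to a small in-memory customer table in plain Python. The column names (`CustomerKey`, `FirstName`, `LastName`, `BirthDate`, `AnnualIncome`, `Notes`), the M/D/YYYY source date format, and average-filling for income are hypothetical assumptions, not details of the Adventure Works files.

```python
from datetime import datetime
from statistics import mean


def clean_customers(rows):
    """Apply Power Query-style cleaning steps to a list of dict rows.

    All column names and formats here are hypothetical placeholders.
    """
    # 1. Remove exact duplicate rows, preserving the original order.
    seen, unique = set(), []
    for row in rows:
        fingerprint = tuple(sorted(row.items()))
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(row)

    # 2. Drop rows whose key column is null or empty.
    unique = [r for r in unique if r.get("CustomerKey") not in (None, "")]

    # 3. Compute the average income used to fill missing values.
    incomes = [float(r["AnnualIncome"]) for r in unique if r.get("AnnualIncome")]
    avg_income = mean(incomes) if incomes else 0.0

    cleaned = []
    for r in unique:
        cleaned.append({
            # 4. Type conversion: key text -> whole number.
            "CustomerKey": int(r["CustomerKey"]),
            # 5. Merge first and last name into one column.
            "FullName": f'{r["FirstName"].strip()} {r["LastName"].strip()}',
            # 6. Standardise dates (source assumed to be M/D/YYYY).
            "BirthDate": datetime.strptime(r["BirthDate"], "%m/%d/%Y").date(),
            # Fill missing income with the column average.
            "AnnualIncome": float(r["AnnualIncome"]) if r.get("AnnualIncome") else avg_income,
            # 7. Unneeded columns (e.g. "Notes") are simply not copied.
        })
    return cleaned
```

The step order here (deduplicate, then filter null keys, then fill) is one reasonable choice; in Power Query the order is whatever the Applied Steps list specifies.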

2. Building a Relational Data Model

Secondly, we modeled the data as a snowflake schema, creating one-to-many relationships between the dimension and fact tables.

We also set relationships as active or inactive, created hierarchies for fields such as Geography (Continent-Country-Region) and Date (Start of Year-Start of Month-Start of Week-Date), and hid the foreign keys from Report view to simplify analysis and visualization and reduce errors.

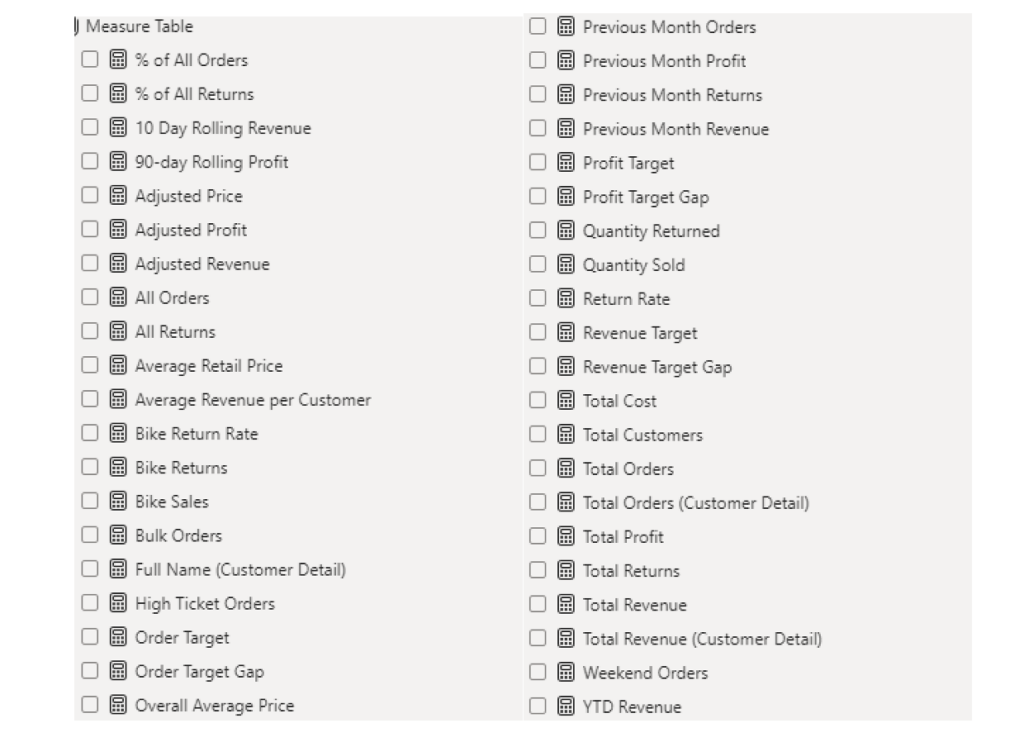
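A one-to-many relationship only behaves as intended when the key on the "one" side is unique and non-null. The plain-Python sketch below, using hypothetical table and column names, checks that condition for one snowflake link (product subcategories pointing to product categories) and lists keys on the "many" side that have no matching row. It illustrates the idea only; it is not part of the Power BI project.

```python
from collections import Counter


def check_one_to_many(one_side, many_side, key):
    """Check a relationship between two tables given as lists of dict rows.

    Returns (keys_unique, orphan_keys):
      keys_unique -- True if every key on the "one" side is non-null and unique
      orphan_keys -- sorted keys on the "many" side with no match on the "one" side
    """
    counts = Counter(row[key] for row in one_side)
    keys_unique = None not in counts and all(n == 1 for n in counts.values())
    orphan_keys = sorted({row[key] for row in many_side} - set(counts))
    return keys_unique, orphan_keys
```

For example, with categories keyed 1 and 2 and a subcategory pointing at category 3, the check reports the relationship as valid on the "one" side but flags key 3 as an orphan.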

3. Creating Calculated Columns and Measures

Next, we used DAX (Data Analysis Expressions), Power BI’s formula language, to create several calculated columns (for filtering) and measures (for aggregation) that we could reference later when analyzing and visualizing the data.

We used calculated columns to flag whether a customer is a parent (Yes/No) and to classify each customer’s income level (Very High/High/Average/Low), priority status (Priority/Standard), and education level (High School/Undergrad/Graduate).

The list of calculated measures is available below and includes key information on revenue, profit, orders, returns, and more.

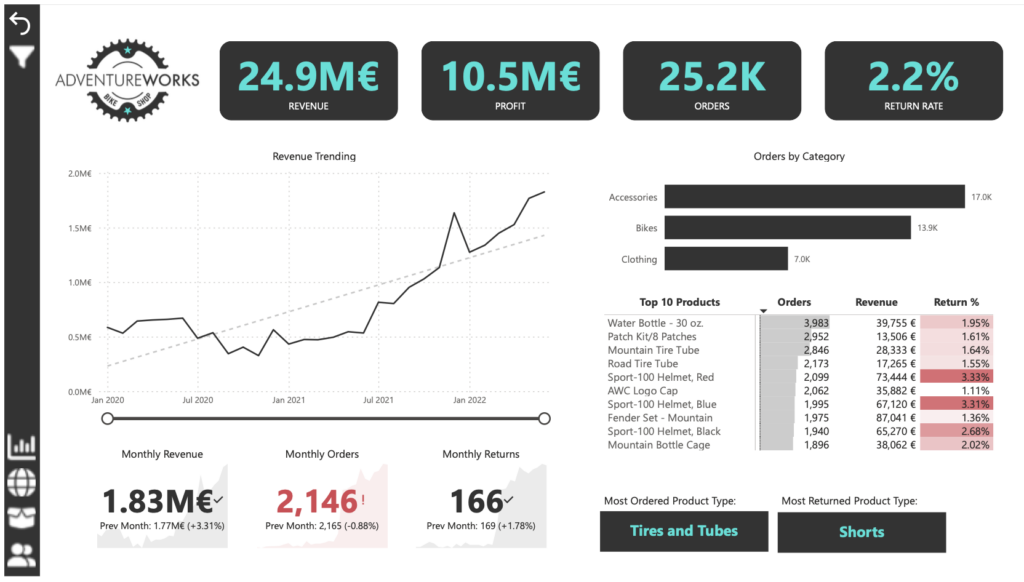

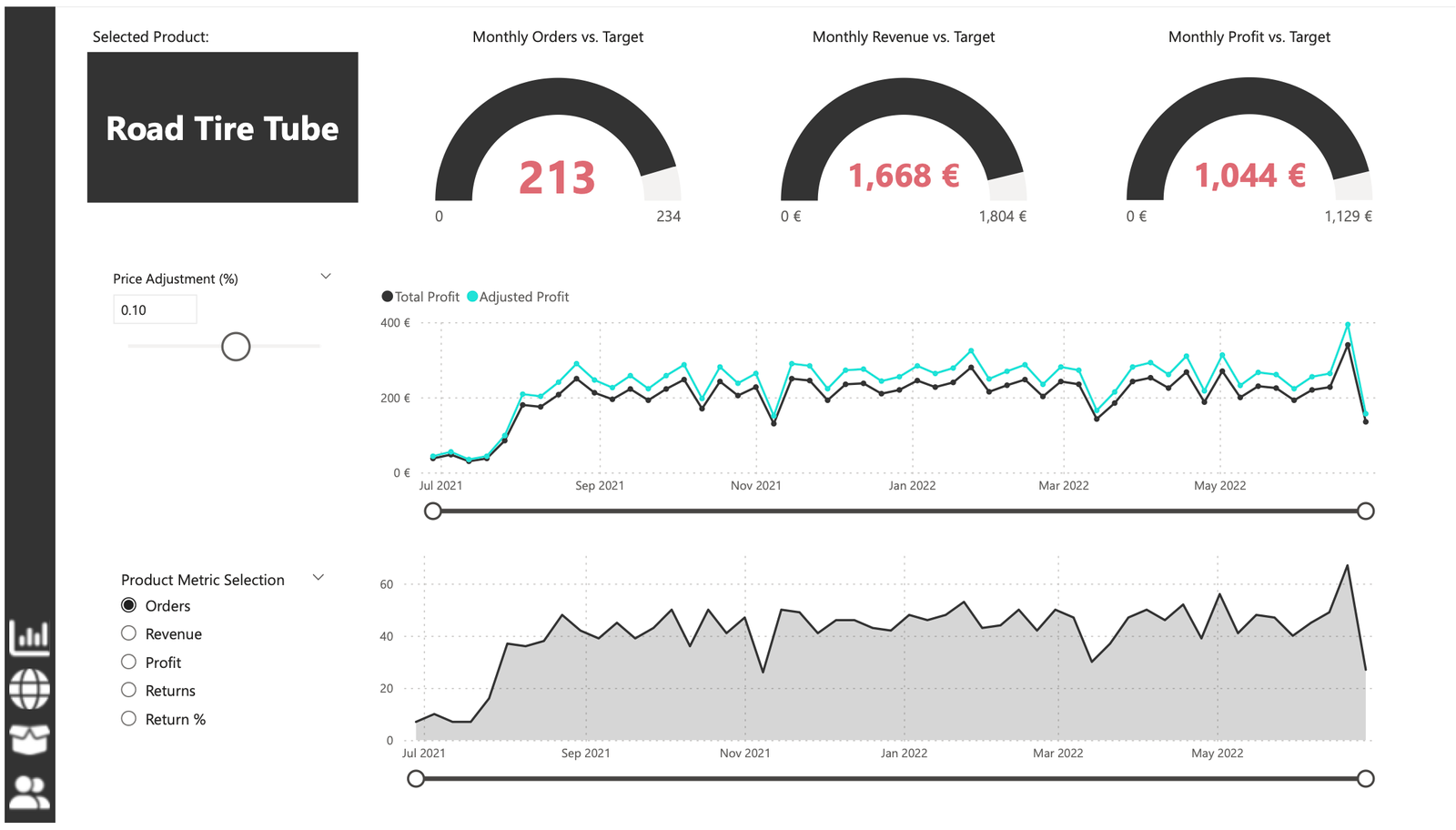
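The calculated columns described above can be sketched in plain Python to show the bucketing logic; in DAX, rules like these are often written with `IF` or `SWITCH`. The income thresholds, the priority rule, the source education values, and all field names below are hypothetical assumptions, since the project’s actual definitions are not stated in the text.

```python
def income_level(annual_income):
    # Hypothetical cut-offs; the project's actual thresholds are not stated.
    if annual_income >= 150_000:
        return "Very High"
    if annual_income >= 100_000:
        return "High"
    if annual_income >= 50_000:
        return "Average"
    return "Low"


# Hypothetical mapping from raw education values to the three groups.
EDUCATION_GROUPS = {
    "Partial High School": "High School",
    "High School": "High School",
    "Partial College": "Undergrad",
    "Bachelors": "Undergrad",
    "Graduate Degree": "Graduate",
}


def add_calculated_columns(customer):
    """Return a copy of a customer row with the four derived columns added."""
    level = income_level(customer["AnnualIncome"])
    is_parent = "Yes" if customer["TotalChildren"] > 0 else "No"
    # Hypothetical rule: parents with High or Very High income are Priority.
    is_priority = is_parent == "Yes" and level in ("High", "Very High")
    return {
        **customer,
        "IsParent": is_parent,
        "IncomeLevel": level,
        "PriorityStatus": "Priority" if is_priority else "Standard",
        "EducationCategory": EDUCATION_GROUPS.get(customer["EducationLevel"], "Unknown"),
    }
```

Unmapped education values fall back to "Unknown" rather than raising an error, so unexpected raw values stay visible in a report instead of breaking the refresh.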

4. Visualising the Data

The final step of the project was creating a multi-page interactive dashboard, including a range of visuals and KPIs that could serve management and lead to informed decision-making. We used several visuals and tools to demonstrate and visualize the data across the 4 report pages, including KPI cards, line and bar charts, matrices, gauge charts, maps, donut charts, and slicers. We made sure the report was fully interactive and simple to navigate, with icons used to enable filters, cancel filters, and guide users to each report page with ease. Features such as drill-through, bookmarks, parameters, and tooltips were also used throughout the dashboard, further enhancing its usefulness and impact on management.

Executive Dashboard: The first report page provides a high-level view of Adventure Works’ overall performance. Card visuals present Key Performance Indicators such as overall revenue, profit margin, total orders, and return rate. Additional cards compare current-month and previous-month performance to highlight recent trends, and a line chart shows revenue from 2020 to 2022 to reveal long-term performance. The page also shows the number of orders by product category to clarify how sales are distributed across products, and a table lists the top 10 products by key indicators (total orders, revenue, and return rate).
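The core KPIs and the current-versus-previous-month cards rest on simple arithmetic, sketched below in plain Python. These definitions are assumptions about typical formulas (revenue as quantity times price, return rate as returned units over sold units, orders as distinct order numbers), not transcriptions of the project’s DAX measures, and every field name is hypothetical. In DAX, month-over-month comparisons are commonly built with time-intelligence functions such as `DATEADD`, and division is commonly guarded with `DIVIDE`.

```python
def core_measures(sales, returns):
    """Compute headline KPIs from lists of sales and return rows."""
    revenue = sum(s["OrderQuantity"] * s["ProductPrice"] for s in sales)
    cost = sum(s["OrderQuantity"] * s["ProductCost"] for s in sales)
    units_sold = sum(s["OrderQuantity"] for s in sales)
    units_returned = sum(r["ReturnQuantity"] for r in returns)
    profit = revenue - cost
    return {
        "Total Revenue": revenue,
        "Total Profit": profit,
        # Guard against division by zero.
        "Profit Margin": profit / revenue if revenue else 0.0,
        # An order can span several lines, so count distinct order numbers.
        "Total Orders": len({s["OrderNumber"] for s in sales}),
        "Return Rate": units_returned / units_sold if units_sold else 0.0,
    }


def previous_month(year, month):
    """Return the (year, month) immediately before the given month."""
    return (year - 1, 12) if month == 1 else (year, month - 1)


def month_over_month(monthly_revenue, year, month):
    """Return (current, previous, pct_change) from a {(year, month): revenue} dict.

    pct_change is None when the previous month has no revenue.
    """
    current = monthly_revenue.get((year, month), 0.0)
    prior = monthly_revenue.get(previous_month(year, month), 0.0)
    change = (current - prior) / prior if prior else None
    return current, prior, change
```

Handling January separately in `previous_month` keeps the comparison correct across year boundaries, which is exactly where a naive "month minus one" breaks.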

Map: The second report page features a map visual, an interactive representation of sales volume across geographic locations, offering insight into Adventure Works’ global sales distribution and reach.

Product Detail: The third report page focuses on product-level analysis. Using drill-through from the top 10 products table on the Executive Dashboard, it displays detailed information for the selected product. Gauge charts compare actual and target monthly orders, revenue, and profit, and an interactive line chart shows how profit would change if the product’s price were adjusted, supporting pricing decisions. A further line chart tracks key weekly product metrics: total orders, revenue, profit, returns, and return rate.
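The price-adjustment chart can be understood as profit recomputed for a range of price changes. In Power BI this kind of input typically comes from a numeric-range (what-if) parameter; the plain-Python sketch below reproduces only the arithmetic. It assumes, as a simplification, that order quantities stay fixed when price changes (no demand response), and the field names are hypothetical.

```python
def adjusted_profit(sales, price_adjustment):
    """Profit if every unit had sold at price * (1 + price_adjustment).

    Assumes quantities are unchanged by the price change (no elasticity).
    """
    return sum(
        s["OrderQuantity"] * (s["ProductPrice"] * (1 + price_adjustment) - s["ProductCost"])
        for s in sales
    )


def profit_curve(sales, adjustments):
    """Return (adjustment, profit) pairs, one point per line-chart value."""
    return [(adj, adjusted_profit(sales, adj)) for adj in adjustments]


# Usage: profit for a -10% to +10% price change on a hypothetical product.
example_sales = [{"OrderQuantity": 10, "ProductPrice": 100.0, "ProductCost": 60.0}]
for adj, profit in profit_curve(example_sales, [-0.1, 0.0, 0.1]):
    print(f"{adj:+.0%}: {profit:.2f}")
```

Because quantities are held fixed, profit rises linearly with price here; a real pricing analysis would also need to model how demand responds.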

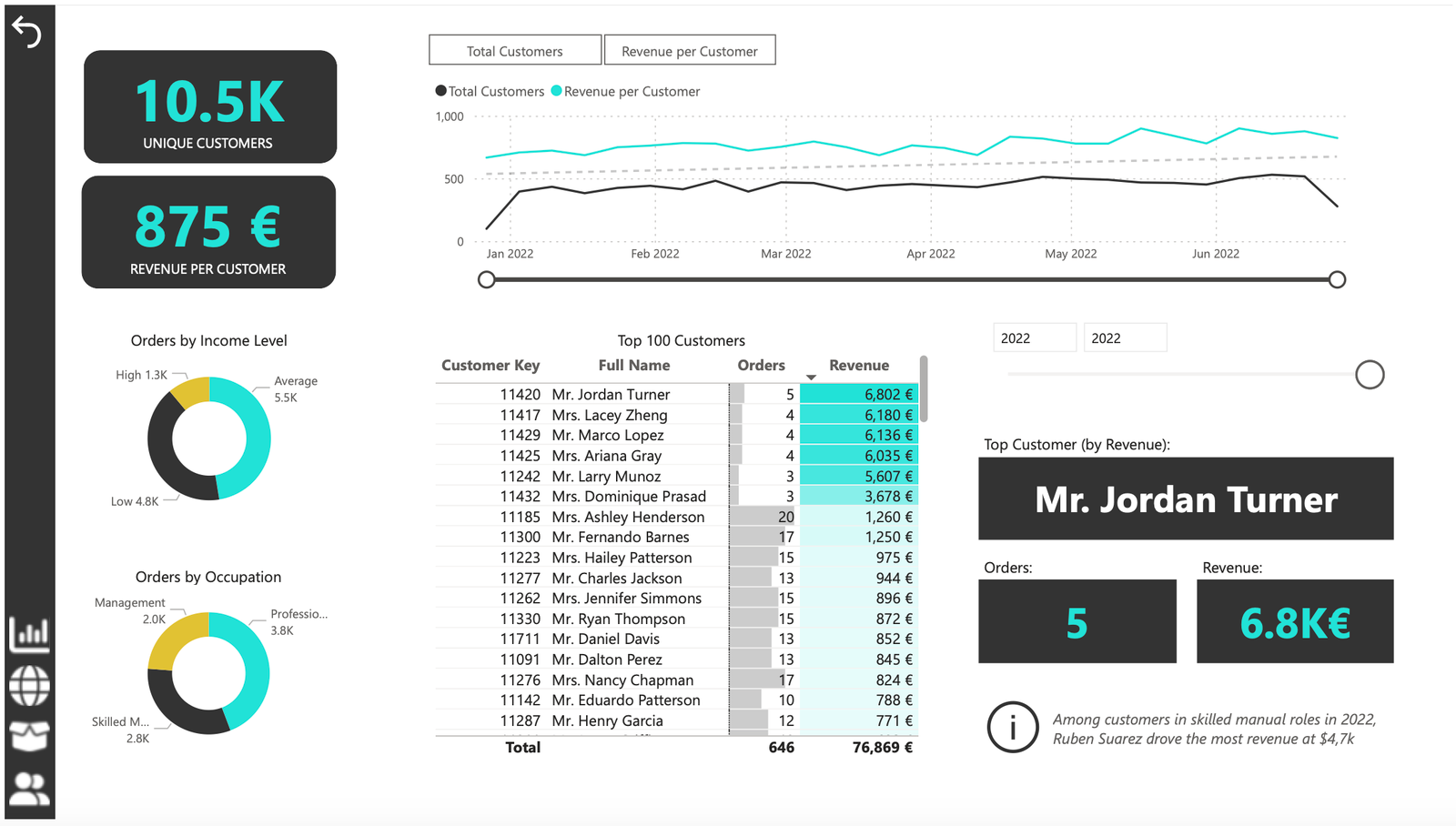

Customer Detail: The fourth and final report page provides deeper insight into customer behavior and value. Donut charts break down total orders by customer income level and occupation to support customer segmentation, and a matrix supported by KPI cards identifies high-value customers based on their order and revenue contributions, highlighting sales opportunities.
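Identifying high-value customers comes down to aggregating revenue and distinct orders per customer and sorting. The plain-Python sketch below shows that ranking under assumed field names (`CustomerKey`, `OrderNumber`, `OrderQuantity`, `ProductPrice`); it illustrates the logic behind such a matrix rather than reproducing the report.

```python
from collections import defaultdict


def top_customers(sales, n=10):
    """Return up to n (customer_key, order_count, revenue) tuples, highest revenue first."""
    revenue = defaultdict(float)
    orders = defaultdict(set)
    for s in sales:
        revenue[s["CustomerKey"]] += s["OrderQuantity"] * s["ProductPrice"]
        orders[s["CustomerKey"]].add(s["OrderNumber"])
    # Sort by revenue, then order count (both descending), then key for stable ties.
    ranked = sorted(revenue, key=lambda k: (-revenue[k], -len(orders[k]), k))
    return [(k, len(orders[k]), revenue[k]) for k in ranked[:n]]
```

Counting distinct order numbers, rather than sales rows, avoids inflating the order count for customers whose orders contain several line items.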

My Resume

Experience Background

Technical Project Manager - R&D and Product Innovation

TPL Trakker Ltd. (Apr 2024 - Present)

- Led the R&D team in developing AI-driven automation solutions for industrial applications, specializing in integrating IoT, Edge AI, and embedded systems into efficient, scalable software-hardware solutions.
- Developed an Audience Engagement Analyzer that uses computer vision and heatmap analytics to measure audience interaction with billboards, providing data-driven insights for targeted advertising.
- Spearheaded the creation of a SaaS platform, contributing to both frontend and backend development of a UWB-based indoor asset tracking application.
- Designed and implemented remote sensing solutions for agriculture, gaining hands-on expertise with advanced sensors.
- Managed cross-functional teams to integrate software and hardware components, ensuring successful development and seamless operation of embedded and cross-platform applications.

Technical Lead

National Center of Artificial Intelligence - SCL, NED UET (Jul 2021 - Mar 2024)

- Led software-hardware teams and contributed significantly to Robotics & IoT projects such as a Weeding Robot, Jazari (a humanoid robot), a Reverse Vending Machine, and IoT devices, demonstrating proficiency in AI, robotics, and embedded development.
- Contributed to a driverless car project, focusing on LiDAR and tracking/depth sensors and using ROS for sensor fusion to enhance the vehicle’s spatial awareness, navigation precision, and object detection.
- Gained working familiarity with prominent edge computing platforms such as the Jetson AGX Xavier and Jetson Nano for low-power AI applications.
- Conducted thorough testing and debugging, identifying and resolving issues promptly.

Research Assistant

Fab Lab - Electrical Engineering, Sukkur IBA University (Aug 2019 - Jun 2021)

- Developed IoT solutions such as IoT energy metering and a LoRa-based SOS alarm device, showcasing expertise in Python, C programming, embedded systems, and IoT.
- Mentored students on projects including quadcopters, a BMS, and a ventilator, showcasing expertise in embedded system development and debugging.

Education Background

MS in Artificial Intelligence

NED University of Engineering & Technology (Feb 2024)

Courses: Machine Learning, Deep Learning, Advanced AI, Intelligent System Design

Principles & Applications of Digital Fabrication

Fab Academy, Fab Foundation (Jun 2019)

Skills: Project Management, 3D Printing, Embedded Programming, Version Control, App/Web Dev

BE in Electrical & Electronics Engineering

Dept of Electrical Engineering, Sukkur IBA University (Dec 2018)

Courses: Intro to Robotics, Digital System Design, Control Systems, OOP, Digital Signal Processing

Soft Skills

Leadership & Strategic Planning

Training & Development

Teamwork & Coordination

Recruiting & Onboarding

Communication & Presentation

Creativity & Innovation

Adaptability & Continuous Learning

Project Management & Time Management

Technical Skills

PYTHON

POWER BI

STRUCTURED QUERY LANGUAGE (SQL)

MACHINE LEARNING

DEEP LEARNING

PYTORCH / TENSORFLOW

NEURAL NETWORKS (ANN / CNN / RNN)

GENERATIVE AI / LLMS

LANGCHAIN

Certifications

Professional Generative AI Specialist Certification Program

Analytix Camp (May 2025 – Sep 2025)

1. Python for Data Science and Analytics: I use Python with pandas and NumPy to collect, clean, and transform data, perform exploratory data analysis (EDA), and build reproducible analysis scripts and visual prototypes.
2. SQL for Data Extraction and Manipulation: I write efficient SQL queries to extract, join, and aggregate data from relational databases, supporting reliable data pipelines and model training.
3. Foundations of Artificial Intelligence: I understand core AI concepts, problem formulation, and ethical considerations, which help me choose the right methods and design responsible AI solutions.
4. Machine Learning: I build, evaluate, and tune machine learning models (regression, trees, clustering), perform feature engineering, and use cross-validation to deliver accurate, actionable predictions.
5. Deep Learning: I design and train deep learning models for complex tasks, manage optimization and regularization, and apply these models to problems in vision, text, and tabular data.
6. Neural Networks (ANN / CNN / RNN): I implement ANN, CNN, and RNN architectures, adapt them to task needs, and interpret model behavior in real-world image, sequence, and general prediction tasks.
7. LSTM (Long Short-Term Memory): I build and tune LSTM models for time series and sequential data, applying them to forecasting, anomaly detection, and sequence prediction problems.
8. Large Language Models (LLMs): I use LLMs for understanding and generation tasks, fine-tune them for domain needs, and evaluate outputs for quality and safety.
9. Hugging Face Ecosystem: I use Hugging Face tools for tokenization, fine-tuning, and model serving, preparing datasets and deploying transformer-based solutions efficiently.
10. LangChain and RAG (Retrieval-Augmented Generation): I build RAG systems and agent workflows with LangChain, connecting vector stores and retrieval pipelines to improve accuracy and provide up-to-date answers.
11. Generative AI Tools: I apply generative AI tools and prompt engineering to create text, image, and multimodal outputs, while implementing safety filters and output quality controls.
12. Streamlit for Prototyping and Demos: I build interactive web apps with Streamlit to showcase models, create user-friendly demos, and let stakeholders explore model outputs live.
13. Power BI for Reporting and Dashboards: I combine model results with business data in Power BI to create interactive dashboards and reports that help stakeholders explore insights and make data-driven decisions.

Verification Link: Azmat Hussain Certification - Analytix Camp

Google Project Management - Professional Certificate

Google Career Certificates at Coursera (2025)

DeepLearning.AI TensorFlow Developer - Professional Certificate

DeepLearning.AI at Coursera (2025)

C Programming with Linux - Specialization

Mines-Télécom & Dartmouth at Coursera (2023)

Robotics: Aerial Robotics - Specialization

University of Pennsylvania at Coursera (2022)

Developing Industrial IoT - Specialization

University of Colorado Boulder at Coursera (2020)

Testimonial

Muhammad Abbas

Chief Executive Officer

Generative AI Project Development

via Fiverr (May 2025 - Sep 2025)

I am pleased to commend Azmat Hussain for his exceptional dedication and achievements. He consistently demonstrates a strong work ethic and a genuine enthusiasm for learning, making a positive impact on our academic environment. His proactive approach to challenges and unwavering commitment to excellence are truly commendable. Azmat Hussain is not only a high achiever academically but also a supportive and collaborative member of our community. His accomplishments inspire his peers and reflect his potential for continued success in all future endeavors.

Contact Me